The “Generative Pre-trained Transformer 3”, or GPT-3 for those in the know, is the new kid on the Natural Language Processing block. In this article, we will look at GPT-3 and its potential applications in finance.

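The GPT-3 paper was first published in May 2020, and last revised on the 22nd of July 2020, by OpenAI, the research organisation co-founded and initially backed by Elon Musk, which started out as a non-profit and has run a capped-profit arm since 2019. You can find the original paper here. GPT-3 belongs to the class of models known as transformers, as does BERT. The purpose of generative transformers like GPT-3 is to take an input and generate a written output (BERT, by contrast, is geared towards understanding text rather than producing it). For instance, given the beginning of a sentence, the model outputs a plausible next word (strictly speaking, a token) and then reuses all of that (initial input + generated word) as the new input to go even further in the sentence. The objective in this text generation task is to minimise the surprise one can feel when reading the output: in technical terms, the model is trained to assign a high probability to the text that actually comes next.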
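To make the "generate, then feed the output back in" loop concrete, here is a minimal sketch in Python. It is not how GPT-3 works internally: it assumes a toy model that only looks at the previous word (a bigram counter), whereas GPT-3 conditions on the whole preceding text. The corpus and all function names are made up for illustration.

```python
import math
from collections import Counter, defaultdict

# Tiny made-up corpus; a real model sees hundreds of billions of tokens.
CORPUS = (
    "the bank approved the loan . "
    "the bank reviewed the loan . "
    "the bank approved the request ."
)

def train_bigram(text):
    """Count, for each word, how often each other word follows it."""
    counts = defaultdict(Counter)
    words = text.split()
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts

def next_word_probs(counts, prev):
    """Turn the follow-up counts for `prev` into probabilities."""
    total = sum(counts[prev].values())
    return {word: c / total for word, c in counts[prev].items()}

def generate(counts, prompt, n_words):
    """Greedy autoregressive loop: pick the likeliest next word,
    append it, and use the extended text as the new input."""
    words = prompt.split()
    for _ in range(n_words):
        probs = next_word_probs(counts, words[-1])
        if not probs:  # the toy model has never seen this word
            break
        words.append(max(probs, key=probs.get))
    return " ".join(words)

def surprisal_bits(counts, sentence):
    """Total 'surprise' of a sentence in bits: sum of -log2(p) per word."""
    words = sentence.split()
    total = 0.0
    for prev, nxt in zip(words, words[1:]):
        p = next_word_probs(counts, prev).get(nxt, 0.0)
        if p == 0.0:
            return math.inf  # unseen continuation: infinitely surprising
        total += -math.log2(p)
    return total

model = train_bigram(CORPUS)
print(generate(model, "the bank", 2))
print(surprisal_bits(model, "the bank approved"))
print(surprisal_bits(model, "the bank reviewed"))
```

In this toy corpus "approved" follows "bank" more often than "reviewed" does, so the first sentence scores a lower surprisal than the second. Training a model like GPT-3 amounts to adjusting its parameters so that real text gets a low score of this kind.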

Practice makes perfect, especially with money

To do that, these models have to be trained on a colossal set of text, crawled and scraped from the internet. GPT-3's training data amounted to around half a trillion words (strictly speaking tokens, which are words or word fragments), roughly equivalent to reading The Hobbit and the Lord of the Rings trilogy a million times.

According to this website it would take a human roughly 28 million hours to read all of that, just over 31 centuries of non-stop reading. But reading is one thing; you also need to be able to remember it. This is why GPT-3 has 175 billion parameters, each of which can (very) loosely be compared to a synapse in the human brain. Each of them has to be trained: not only does training the model take time, it also requires a lot of money (in electricity and components), an estimated $12 million according to VentureBeat.
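The reading-time figure is easy to sanity-check. The sketch below assumes a reading speed of 300 words per minute, which is our own assumption and not a number taken from the website cited above, and it assumes reading around the clock.

```python
# Back-of-the-envelope check of the reading-time claim.
WORDS_IN_TRAINING_DATA = 0.5e12   # "around half a trillion words"
WORDS_PER_MINUTE = 300            # assumed reading speed (not from the source)
HOURS_PER_YEAR = 24 * 365.25      # reading non-stop, day and night

def reading_time(words, words_per_minute):
    """Return (hours, centuries) needed to read `words` without a break."""
    hours = words / words_per_minute / 60
    centuries = hours / HOURS_PER_YEAR / 100
    return hours, centuries

hours, centuries = reading_time(WORDS_IN_TRAINING_DATA, WORDS_PER_MINUTE)
print(f"{hours / 1e6:.1f} million hours, about {centuries:.1f} centuries")
```

Under these assumptions the result lands close to the figures quoted above. A slower reader, or one who sleeps, would need considerably longer.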

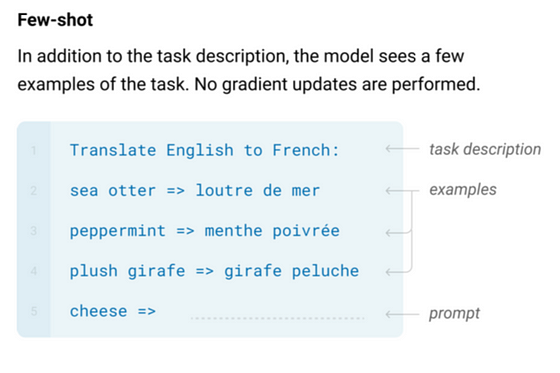

Fortunately, people like us — not backed by a famous billionaire — do not have to train the model themselves. We can take the already trained (or rather pre-trained) model and tailor it to the task at hand. Usually, it is done with fine-tuning, training the model a little more on the new task. But GPT-3 does it differently: you just have to show it a few times (few-shot) what you want and it works. Forbes recently released a good overview article on GPT-3 highlighting some of its general uses and drawbacks.
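In practice, "showing it a few times" means writing the examples directly into the text that is sent to the model. The sketch below only builds such a prompt as a string; it does not call any API. The headlines, labels and the `build_few_shot_prompt` helper are hypothetical and chosen purely for illustration.

```python
def build_few_shot_prompt(task, examples, query):
    """Assemble a few-shot prompt: a task description, a handful of
    worked examples, then the new case left open for the model."""
    lines = [task, ""]
    for headline, sentiment in examples:
        lines.append(f"Headline: {headline}")
        lines.append(f"Sentiment: {sentiment}")
        lines.append("")
    lines.append(f"Headline: {query}")
    lines.append("Sentiment:")  # the model is expected to continue from here
    return "\n".join(lines)

# Made-up examples; in a real setting these would come from labelled data.
EXAMPLES = [
    ("Retailer beats quarterly profit forecasts", "positive"),
    ("Airline issues second profit warning this year", "negative"),
]

prompt = build_few_shot_prompt(
    "Classify the sentiment of each financial headline.",
    EXAMPLES,
    "Manufacturer announces record order book",
)
print(prompt)
```

No parameters of the model change here. The examples only live in the input, which is what distinguishes this approach from fine-tuning.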

A revolution for finance?

In finance, there are some exciting use-cases that have the potential to redefine decision-making. The ability to extract sentiment from large volumes of text and to infer correlations with market signals has improved significantly, meaning faster (even automated) decisions for hedge funds and trading desks. Because the training data is so large and spans many languages, it will now be easier to auto-translate non-English company accounts, potentially even opening up new opportunities in global markets.

Example from the original paper

Likewise, given enough new textual data and outcomes, it may be possible to predict with a high degree of accuracy how a past lending decision turned out, based on the written analysis done at the time and the conditions attached to the approval. It would only be a guide, but it would act as a powerful assistant to future decision-making.

Unfortunately, when it comes to generating written language, there are very few quick wins in finance. If text generation is not anchored to the underlying data, the margin for error increases significantly. There are some interesting use cases in adding context around algorithmic analysis, but only where models are appropriately trained on top of GPT-3. And this is where GPT-3 trips and falls: it only cares that the content looks like it was written by a human, not whether it is fundamentally correct. For instance, when asked which is heavier, a pencil or a toaster, its answer is:

“A pencil is heavier than a toaster.”

GPT-3, like the internet, is biased

GPT-3 has basically been trained on the whole internet, so it contains both the good and the bad. This article analyses the model from the point of view of biases. This is a potential drawback for applications such as generating written counter-arguments for M&A proposals: the biases picked up in training may make the model naturally more lenient towards some companies than others.

This is why it's critical for large organisations to bring domain and technology experts together. With a purely technical focus, the subtleties will not be picked up, and these make a big difference. More importantly, leveraging the talent of technical teams helps to position organisations as leaders, as new technologies such as GPT-3 can be quickly applied to existing problems. We have recently released an article about applying AI in financial services, which you can read here.

GPT-3 is a big leap forward, no doubt about it. As more people experiment creatively with the model, we are sure that more possibilities for the technology will emerge. The potential is only limited by our own imagination.

At Scribe, we're taking a research-led approach to artificial intelligence, bringing cutting-edge technology into the industry and removing the friction of analysing the corporates that you lend to. We see the huge potential of this technology and are working alongside banks to deliver real, tangible AI-driven solutions.

Header image by Bruno